The internet is an ugly, ugly place.

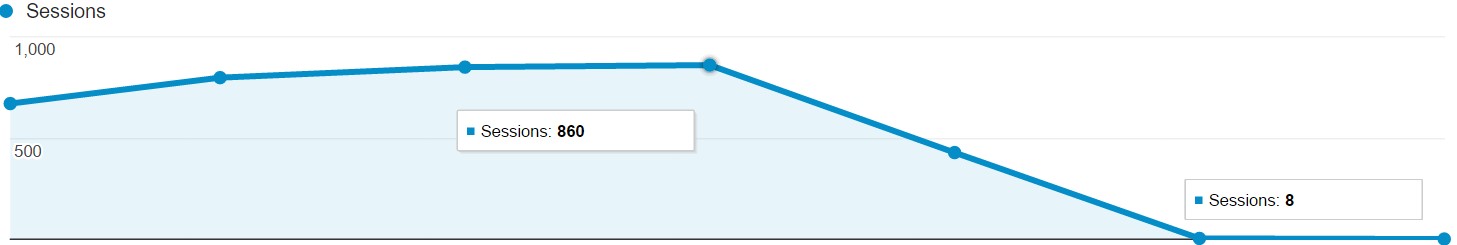

I know this not because I saw a shocking video, but because of this graph.

This graph belongs to a client of mine who was suffering from a Google penalty. And no, it didn’t follow a major algorithm update like Panda; Google had simply detected conflicting signals caused by duplicate content on their website.

Sure, this company noticed something had gone badly wrong and came to us to fix the damage.

But the ugly part is this: so many companies have websites that are telling search engines they don’t want to be found, and they don’t even know it.

Every website is sending thousands of signals to Google right now, and many sites fail to do these three things:

1) Avoid sending conflicting signals

2) Have an optimized, easily crawlable site structure

3) Perform optimally, as measured by speed testers such as GPSI (Google PageSpeed Insights)

Doing these three things correctly is the best place to start if your goal is to bring large amounts of organic traffic through your website’s front door. It’s like flipping your “Closed” sign around to say “Open for Business” instead.

And sending the right signals doesn’t have to be rocket science: the high-level strategy behind it can be understood by anyone. To prove it, here are a few of the common mistakes we’ve seen from companies recently.

Not Sending Conflicting Signals: Common Mistakes

How can you know if you’re sending conflicting signals? We use these three essential tools to find most of the potentially harmful ones:

- Google Search Console

- Screaming Frog

- DeepCrawl

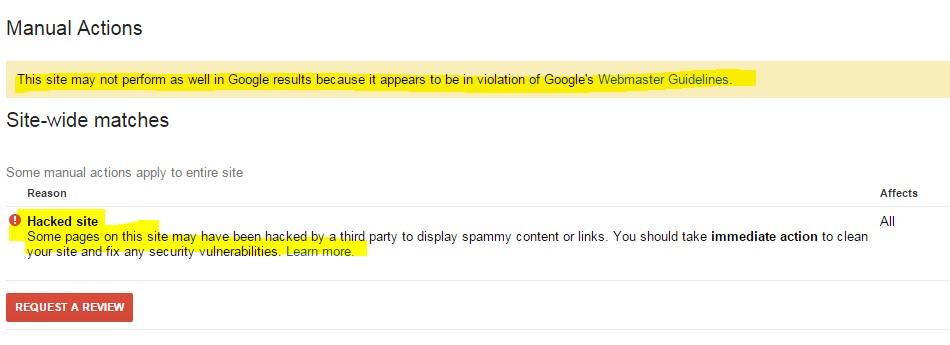

Google Search Console gives you a massive amount of data about your site, including BIG mistakes. Kind of like this:

Having your site flagged as “hacked” by Google is the last thing you want. In this case, the company had copied its content to multiple sites without realizing it would be penalized as “duplicate” content. Google doesn’t take spam lightly, because it has had to punish sites that tried to game the system time after time.

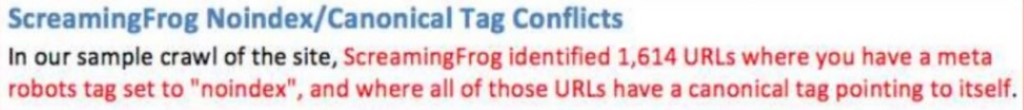

Finding tag conflicts in Screaming Frog is extremely easy.

Here, we found 1,614 URLs on our client’s website that were telling Google “Yes, index this page” and “No, do not index this page” at the same time.

Conflicting signals like these confuse Google, and that spells trouble for your website’s rankings.
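Screaming Frog finds these conflicts for you, but the underlying check is simple enough to sketch. The Python below is a minimal illustration, assuming one common form of the conflict: a URL listed in the XML sitemap (an “index me” request) whose meta robots tag or `X-Robots-Tag` header says `noindex`. The function names and the `in_sitemap` flag are hypothetical, not Screaming Frog’s API, and the sketch ignores crawler-specific directives such as `googlebot: noindex`.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            self.directives.update(
                d.strip().lower() for d in content.split(",") if d.strip()
            )


def index_signals(html, headers, in_sitemap):
    """Return which of "index" / "noindex" a page signals (simplified)."""
    parser = RobotsMetaParser()
    parser.feed(html)
    # HTTP header names are case-insensitive, so normalize them first.
    headers = {k.lower(): v for k, v in headers.items()}
    header_directives = {
        d.strip().lower() for d in headers.get("x-robots-tag", "").split(",")
    }
    signals = set()
    for directives in (parser.directives, header_directives):
        # "none" is shorthand for "noindex, nofollow".
        if directives & {"noindex", "none"}:
            signals.add("noindex")
        elif "index" in directives:
            signals.add("index")
    # Hypothetical assumption: a sitemap entry counts as an "index me" signal.
    if in_sitemap:
        signals.add("index")
    return signals


def has_conflict(html, headers, in_sitemap):
    """True when the page says both "index me" and "don't index me"."""
    return {"index", "noindex"} <= index_signals(html, headers, in_sitemap)


page = '<meta name="robots" content="noindex, follow">'
print(has_conflict(page, {}, in_sitemap=True))   # True
print(has_conflict(page, {}, in_sitemap=False))  # False
```

Run over every crawled URL, a check like this produces the same kind of list Screaming Frog reports: pages that should either be removed from the sitemap or have their `noindex` lifted.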

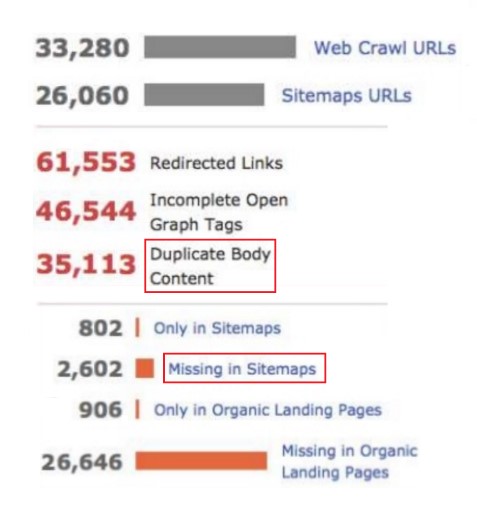

Using data from DeepCrawl, we can see a couple of major errors on this website.

35,113 instances of duplicate body content are all but certain to hurt this website’s rankings.
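To see how a crawler can count duplicate body content at this scale, here is a minimal Python sketch that groups URLs whose normalized body text hashes to the same value. It assumes you already have a mapping of URL to extracted body text (a hypothetical input format), and it only catches exact matches after lowercasing and collapsing whitespace; we don’t know how DeepCrawl itself computes its figure, and tools may also flag near-duplicates.

```python
import hashlib
import re
from collections import defaultdict


def normalize(text):
    """Lowercase and collapse whitespace so trivial differences don't hide duplicates."""
    return re.sub(r"\s+", " ", text).strip().lower()


def find_duplicate_bodies(pages):
    """Group URLs whose normalized body text is identical.

    pages: dict mapping URL -> extracted body text (hypothetical input).
    Returns a list of URL groups that share the same body content.
    """
    groups = defaultdict(list)
    for url, body in pages.items():
        digest = hashlib.sha256(normalize(body).encode("utf-8")).hexdigest()
        groups[digest].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


pages = {
    "https://example.com/shoes": "Our best   running shoe.",
    "https://example.com/shoes?color=red": "Our best running shoe.",
    "https://example.com/about": "About us.",
}
print(find_duplicate_bodies(pages))
# [['https://example.com/shoes', 'https://example.com/shoes?color=red']]
```

Hashing keeps the comparison linear in the number of pages, which matters when a site has tens of thousands of URLs.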

Having an Optimized Site Structure: What Not to Do

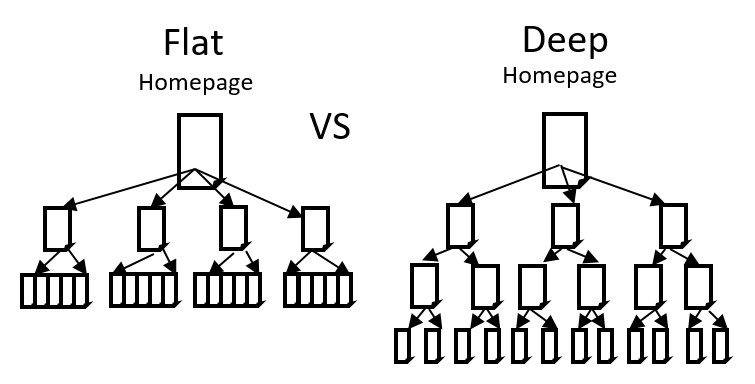

1) As we’ve learned more about SEO over the years, it’s become clear that deep architecture is, most of the time, better for SEO than flat architecture. A flat architecture reduces your ability to focus on important topics and creates a less enjoyable experience for visitors.

2) Use a variation dropdown box to show multiple versions of the same product or service, instead of giving each version its own page.

3) Avoid duplicating URLs in your site’s structure; only small amounts of duplication are acceptable.
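Whether a site is deep or flat comes down to how many clicks each page sits from the homepage. A minimal Python sketch of measuring that is a breadth-first search over the internal link graph; the `links` dictionary is a hypothetical stand-in for the link data a crawler such as Screaming Frog or DeepCrawl exports. Pages missing from the result are unreachable through internal links.

```python
from collections import deque


def click_depth(links, home):
    """Breadth-first search from the homepage.

    links: dict mapping each URL to the URLs it links to (hypothetical input).
    Returns a dict of URL -> minimum number of clicks from `home`.
    """
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            # BFS reaches each page first by its shortest click path.
            if target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


site = {
    "/": ["/shoes", "/about"],
    "/shoes": ["/shoes/running"],
    "/shoes/running": ["/shoes/running/trail"],
}
print(click_depth(site, "/"))
# {'/': 0, '/shoes': 1, '/about': 1, '/shoes/running': 2, '/shoes/running/trail': 3}
```

In a deep architecture, depth should grow along topic lines (category, then subcategory, then product) rather than at random.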

Your Website Performance: How to Optimize

Both users and Google are looking at your site’s load speed.

If you run some tests and find your page speed sub-optimal, you can start by doing these five things:

- Optimize high-resolution images and videos

- Compress files with Gzip

- Minify your code (remove unnecessary characters such as whitespace and comments)

- Remove redirects

- Use no more than 5 fonts, and know where you’re importing them from
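Gzip is normally switched on in your web server or CDN configuration (the browser then receives a `Content-Encoding: gzip` response), not written into application code. Still, a minimal Python sketch using the standard `gzip` module shows why it helps text files like HTML, CSS and JavaScript so much. The `gzip_savings` helper and the sample stylesheet are hypothetical illustrations; already-compressed formats such as JPEG gain little or nothing.

```python
import gzip


def gzip_savings(data: bytes) -> float:
    """Fraction of bytes saved by gzip-compressing `data` (negative if it grows)."""
    if not data:
        return 0.0
    compressed = gzip.compress(data)
    return 1 - len(compressed) / len(data)


# Repetitive text such as CSS, HTML and JavaScript compresses very well.
css = b"body { margin: 0; padding: 0; }\n" * 200
print(f"gzip saves {gzip_savings(css):.0%} of this stylesheet")
```

Very small files can actually grow, because gzip adds a fixed header, which is why servers usually skip compression below a minimum size.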

These points are a good place to start, but they still might not be enough to get your page speed where it should be. If that’s the case, the next step is to look at third-party servers that deliver slow page elements to your website.
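A first step in that direction is simply listing which outside hosts a page pulls elements from. The Python sketch below, built on the standard library’s `html.parser` and `urllib.parse`, collects the hosts behind scripts, images, iframes and stylesheets that differ from the page’s own host. The function names and example domains are hypothetical, and the sketch doesn’t measure speed; you would still time those hosts with a tool like GPSI or your browser’s network panel.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class ResourceParser(HTMLParser):
    """Collects URLs of scripts, images, iframes and stylesheets in a page."""

    def __init__(self):
        super().__init__()
        self.urls = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in ("script", "img", "iframe") and attrs.get("src"):
            self.urls.append(attrs["src"])
        elif tag == "link" and attrs.get("href"):
            rel = (attrs.get("rel") or "").lower().split()
            # Count stylesheets only; rel values like "canonical" aren't page elements.
            if "stylesheet" in rel:
                self.urls.append(attrs["href"])


def third_party_hosts(html, page_url):
    """Return the set of hosts, other than the page's own, that serve page elements."""
    parser = ResourceParser()
    parser.feed(html)
    own_host = urlparse(page_url).hostname
    hosts = set()
    for url in parser.urls:
        # Resolve relative URLs against the page before reading the host.
        host = urlparse(urljoin(page_url, url)).hostname
        if host and host != own_host:
            hosts.add(host)
    return hosts


page = """
<link rel="stylesheet" href="/css/site.css">
<link rel="stylesheet" href="https://fonts.googleapis.com/css?family=Roboto">
<script src="https://cdn.example-widgets.com/chat.js"></script>
<img src="/img/logo.png">
"""
print(sorted(third_party_hosts(page, "https://www.example.com/")))
# ['cdn.example-widgets.com', 'fonts.googleapis.com']
```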

Fortunately for you, when you search on Google you only see the websites that have already flipped their sign around to show “Open for Business.” If your site is still closed or hasn’t completely opened: